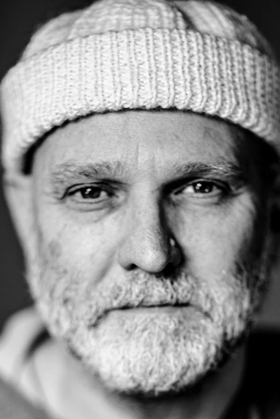

Rob Chandler of Morden Wolf explains why Unreal Engine 5 and nVidia’s 4000 series graphics cards will revolutionise virtual production

Virtual production is a hot topic in the film and television industry, with programmes like Disney’s The Mandalorian showing off the capabilities of technology and techniques that critics previously dismissed as glorified green screen. The use of LED stages for filming has made virtual production the fastest-growing area of visual effects and production technology today. Even giants like Sony are now getting in on the action, with Sony recently announcing its acquisition of VFX and virtual production company Pixomondo.

Virtual production lets live footage be mixed with computer graphics that can be tweaked in real time, so it’s easy to see its appeal for filmmakers. The results? Agility, flexibility and the potential for limitless creativity, at an affordable cost compared with traditional techniques. But given its accessibility, the use of virtual production extends beyond filmmakers and promises to disrupt content creation as we know it. It allows creators to point, shoot and record, to explore 3D backgrounds in real time, walking through spaces as if they were really there, and even to broadcast experiences like gigs from within the metaverse.

Given other benefits, like the environmentally friendly nature of virtual production and its applications in the metaverse, it’s clear that it’s the future. Yet it hasn’t been free from criticism. How realistic sets look, along with considerable glitches, have been highlighted as the main problem areas, and many have pointed out that for virtual production to reach its full potential, filmmakers must gain a better understanding of the technology.

Problems of today will become problems of yesterday

The eagerly awaited release of the video game Cyberpunk 2077, and its creators’ ambitious attempt to take graphical fidelity to a new level, highlights the evolution in gaming towards photorealism and greater immersion. However, while the game was impressive, multiple issues plagued its launch. Many users’ GPUs simply couldn’t keep up. Virtual production blends gaming technology with traditional filmmaking techniques, yet it is held to an even higher standard because of its application, so it faces even greater pressure to achieve realism than games like Cyberpunk.

Indeed, gamey graphics have been a stumbling block for the wider adoption of virtual production. However, with nVidia’s 4000 series graphics cards recently released and the launch of Unreal Engine 5 (UE5.1), many of the problems of today will become problems of yesterday.

Changing the content production landscape one pixel at a time

According to nVidia, the latest series of graphics cards will provide computers with the ability to generate completely simulated, fully ray-traced worlds at the highest resolutions, with extraordinary levels of detail and outstanding performance. Paired with Unreal Engine 5, this is a groundbreaking development for virtual production, enabling the rendering of sophisticated graphics and the provision of interactive experiences and immersive virtual worlds.

Nanite (Unreal’s virtualised geometry system) will allow meshes to be directly imported and replicated. Paired with the new shadow mapping system, this means a new degree of detail and realism can be delivered. What’s more, whereas past techniques like Percentage-Closer Filtering would excessively blur and lessen the effect of high-resolution geometry and shadows, the new Shadow Map Ray Tracing will enable more plausible soft shadows and contact hardening.

This means shadows produced by things further away will be softer than those cast by objects closer to the shadow-receiving surface, mimicking how shadows appear in real life. There’s plenty more to get excited about, including the Lumen feature. Unreal Engine describes it as “a fully dynamic global illumination and reflections solution that enables indirect lighting to adapt on the fly to changes to direct lighting or geometry”, for example when the sun’s angle changes with the time of day or an exterior door is opened.
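The contact hardening described above can be illustrated with a simple similar-triangles estimate of penumbra width, the kind of approximation commonly used in soft-shadow techniques. The sketch below is only a geometric illustration under stated assumptions: it is not Unreal Engine’s implementation, and the function name, parameters and scene values are hypothetical.

```python
def penumbra_width(light_size: float, blocker_dist: float, receiver_dist: float) -> float:
    """Approximate penumbra width from similar triangles.

    All distances are measured from the light source. This is an
    illustrative model only, not Unreal Engine's shadow code.
    """
    if light_size < 0 or blocker_dist <= 0 or receiver_dist < blocker_dist:
        raise ValueError("expected light_size >= 0 and 0 < blocker_dist <= receiver_dist")
    # The further the occluder sits from the receiving surface, the wider the penumbra.
    return light_size * (receiver_dist - blocker_dist) / blocker_dist


# Hypothetical scene: a 1 m wide light with the receiving surface 10 m away.
near_contact = penumbra_width(1.0, 9.5, 10.0)  # occluder 0.5 m from the surface
far_occluder = penumbra_width(1.0, 2.0, 10.0)  # occluder 8 m from the surface
print(f"near contact: {near_contact:.3f} m, far occluder: {far_occluder:.3f} m")
```

In this model the shadow edge sharpens as the occluder approaches the surface and is perfectly hard where the two touch, which is the behaviour the term contact hardening refers to.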

Draw call constraints have also been reduced, allowing for complex graphics with less pressure on the CPU and thus the GPU. Paired with nVidia’s new GPUs, this is a game changer.

What does this mean for content producers? Shoot anywhere, real or virtual, without it looking like a game. One example would be the use of digital twins that are indistinguishable from real locations. Scanning the Colosseum, with its worn and textured stone masonry, would previously have created huge lighting challenges for rendering, but this is far less of a challenge now.

Having previously facilitated a live auction in the metaverse for luxury auctioneer dePury’s, we can see how the compatibility of Unreal’s MetaHuman framework with UE5’s new rigging, animation and physics features promises to help catapult this technology into the future, with more lifelike visuals for all. This really is the cherry on top: greater immersion in virtual environments and greater difficulty in distinguishing what’s real from what’s not.

As the world becomes increasingly virtual, creators will be able to provide and monetise experiences like gigs and meet-and-greets using virtual production and upgraded tech, satisfying demand for the closeness and immersion that real-life experiences provide.

The future is virtual production

Already we’re seeing an evolution in the quality of video production on platforms like TikTok, where popular influencer content, and sometimes even user-generated content, is increasingly mistaken for a movie scene. Many creators are paying professional videographers at high cost to achieve these results and cash in on the creator economy and lucrative partnerships, but for others this is simply unaffordable. Ultimately, it’s developments like these that will help entice creators to use virtual production and elevate their content affordably.

The future is digital and the future of film and content is virtual production.

Rob Chandler is founder and CEO of Morden Wolf
